V-Factory: Autonomous Content Infrastructure for High-Volume Video Production

Cloud-native infrastructure that turns signals into platform-ready short-form output — end-to-end. Built around a multimodal quality gate (VQS) and a closed-loop learning system that improves every batch.

Not a “video tool”. A cloud-native factory with multimodal QA and a learning loop.

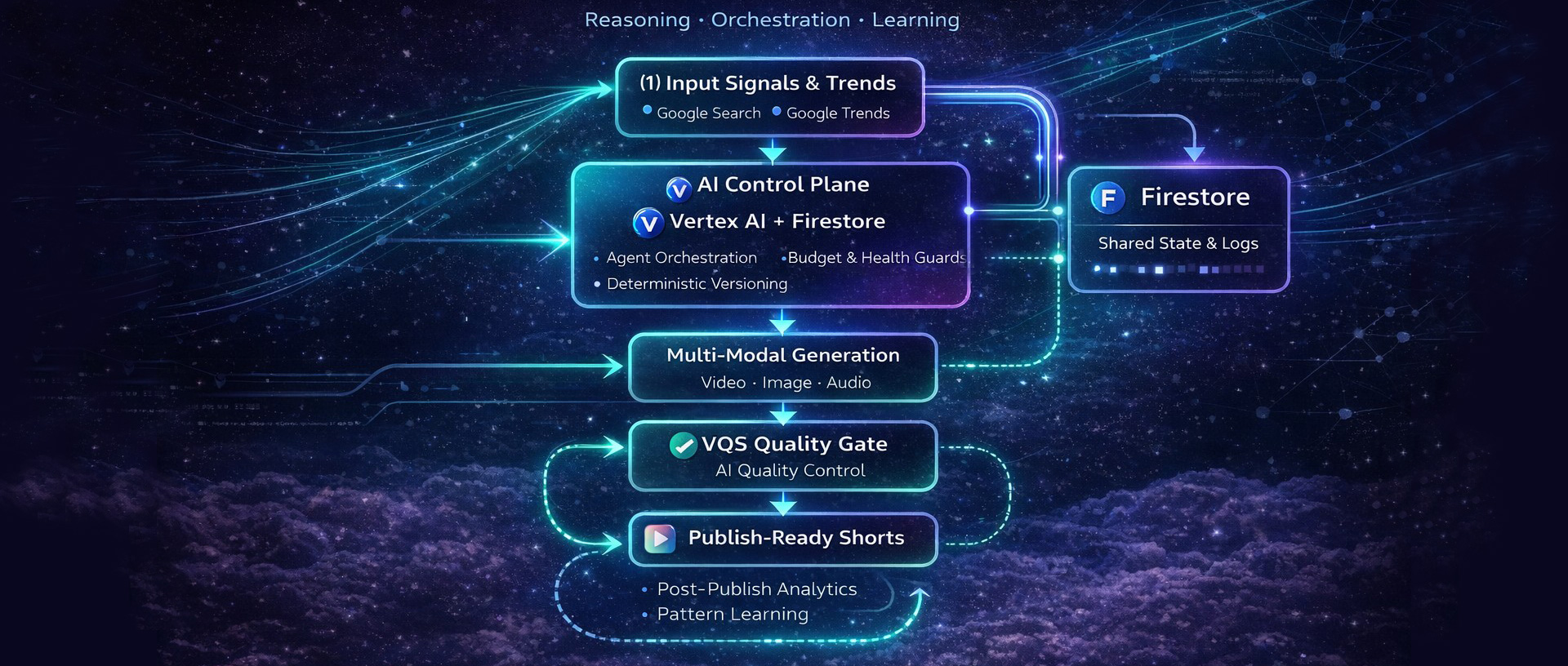

Signals / Trends / Inputs
↓ Gemini (Multimodal Reasoning)
↓ AI Control Plane (Vertex AI + Firestore)
↓ Multi-Modal Generation (video · image · audio)
↓ VQS Gate (AI Quality Control)
↓ Platform-Ready Shorts
↺ Analytics → Learning

Short-form production is costly, manual, and inconsistent. Quality drifts, iterations are slow, and performance learnings rarely feed back into the process.

High cost

Human-dependent production makes unit economics unpredictable.

Manual QA bottleneck

Most pipelines fail on consistency and late-stage “drift”.

No feedback loop

Retention data rarely improves prompts and structure systematically.

V-Factory automates the full lifecycle: signal → concept → script → generation → quality audit → assembly. It is designed for batch throughput and repeatable short-form output.
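As a rough illustration of the lifecycle above, the stages can be modeled as an ordered chain of functions that each update a shared job record. This is a minimal sketch under stated assumptions, not the production code: `Job`, `make_stage`, and `run_pipeline` are hypothetical names, and each stage body is a placeholder that only records that it ran.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Job:
    """Hypothetical record carried through the pipeline for one clip."""
    signal: str
    history: List[str] = field(default_factory=list)


Stage = Callable[[Job], Job]


def make_stage(name: str) -> Stage:
    """Build a placeholder stage that only records that it ran."""
    def stage(job: Job) -> Job:
        job.history.append(name)
        return job
    return stage


# Stage order follows the lifecycle described above (the signal is the input).
STAGE_NAMES = ["concept", "script", "generation", "quality_audit", "assembly"]
STAGES: List[Stage] = [make_stage(n) for n in STAGE_NAMES]


def run_pipeline(job: Job, stages: List[Stage] = STAGES) -> Job:
    """Run every stage in order; a real stage would call models or services."""
    for stage in stages:
        job = stage(job)
    return job
```

Keeping stages as plain functions over one record makes it easy to insert, reorder, or retry a single step without touching the others.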

VQS — Video Quality System

Frame-level AI audit for consistency, plausibility, text/logo integrity, and scenario alignment.

Temporal Precision

Hook-first pacing optimized for Shorts/Reels retention. Structure tuned for short-form behavior.

Self-Learning Loop

Performance feedback improves prompts, model selection, and scenario structure batch-to-batch.

We are currently in the dogfooding phase, running our own 24/7 channels to stress-test the pipeline. This lets us refine VQS behavior and budget/health guards using real performance signals — not guesses.

Real workloads

Continuous batches validate reliability, retries, and cost controls under pressure.
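One way to picture a cost control of this kind is a per-batch spend cap that refuses any charge that would exceed it. The sketch below is an assumption for illustration only: `BudgetGuard`, `BudgetExceeded`, and the unitless cost values are hypothetical and do not describe the actual guards.

```python
from dataclasses import dataclass


class BudgetExceeded(Exception):
    """Raised when a charge would push a batch past its cap."""


@dataclass
class BudgetGuard:
    """Hypothetical per-batch spend tracker; the currency unit is arbitrary."""
    cap: float
    spent: float = 0.0

    def charge(self, cost: float) -> None:
        """Record a cost, refusing it if the cap would be exceeded."""
        if cost < 0:
            raise ValueError("cost must be non-negative")
        if self.spent + cost > self.cap:
            raise BudgetExceeded(f"cap {self.cap} would be exceeded")
        self.spent += cost

    @property
    def remaining(self) -> float:
        """Budget still available in this batch."""
        return self.cap - self.spent
```

Calling `charge` before each generation step means a runaway retry loop stops at the cap instead of continuing to spend.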

VQS tuned by data

Quality rules evolve from observed failure modes (drift, artifacts, unreadable text).

Closed-loop learning

Analytics feed back into prompts, pacing, and model routing for the next batch.
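To make the idea of analytics feeding model routing concrete, here is a minimal sketch that uses an epsilon-greedy rule: mostly pick the model with the best average retention so far, and occasionally try another one. Epsilon-greedy is a technique chosen for this example, not a description of the actual routing logic, and `ModelRouter` and the model names are hypothetical.

```python
import random
from collections import defaultdict
from typing import Dict, List, Optional


class ModelRouter:
    """Hypothetical epsilon-greedy router over generation models."""

    def __init__(self, models: List[str], epsilon: float = 0.1,
                 rng: Optional[random.Random] = None) -> None:
        self.models = list(models)
        self.epsilon = epsilon
        self.rng = rng or random.Random()
        self.total: Dict[str, float] = defaultdict(float)
        self.count: Dict[str, int] = defaultdict(int)

    def record(self, model: str, retention: float) -> None:
        """Store one observed retention score (0.0 to 1.0) for a model."""
        self.total[model] += retention
        self.count[model] += 1

    def mean(self, model: str) -> float:
        """Average retention so far; untried models score 0.0."""
        n = self.count[model]
        return self.total[model] / n if n else 0.0

    def choose(self) -> str:
        """Explore with probability epsilon, otherwise pick the best mean."""
        if self.rng.random() < self.epsilon:
            return self.rng.choice(self.models)
        return max(self.models, key=self.mean)
```

After each batch, the retention figures would be passed to `record`, and the next batch would call `choose` to select a model.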

No vanity testimonials — just measurable pipeline behavior.

Autonomous assembly

Zero human intervention from batch start to platform-ready output (in normal runs).

Fast VQS audit

Target: multimodal QA under 15 seconds per clip (config-dependent).

Consistency drift guard

VQS rejects late-stage anomalies and triggers deterministic retries.
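A retry can be made deterministic by deriving each attempt's random seed from the clip identifier and the attempt number, so a rerun of the same batch reproduces the same sequence of attempts. The sketch below assumes that approach; `attempt_seed`, `generate_with_retries`, and the `generate` / `passes_vqs` callables are hypothetical stand-ins for the real generation and audit steps.

```python
import hashlib
from typing import Callable, Optional


def attempt_seed(clip_id: str, attempt: int) -> int:
    """Derive a stable 32-bit seed from the clip id and attempt number."""
    digest = hashlib.sha256(f"{clip_id}:{attempt}".encode("utf-8")).digest()
    return int.from_bytes(digest[:4], "big")


def generate_with_retries(
    clip_id: str,
    generate: Callable[[int], str],
    passes_vqs: Callable[[str], bool],
    max_attempts: int = 3,
) -> Optional[str]:
    """Return the first output that passes the gate, or None if all fail."""
    for attempt in range(max_attempts):
        output = generate(attempt_seed(clip_id, attempt))
        if passes_vqs(output):
            return output
    return None
```

Because the seed depends only on the clip id and attempt index, a failed clip can be replayed later for debugging with exactly the same inputs.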

A clear path from prototype validation to closed-loop learning and multi-language expansion.

Phase 1 — Infrastructure & VQS (Current)

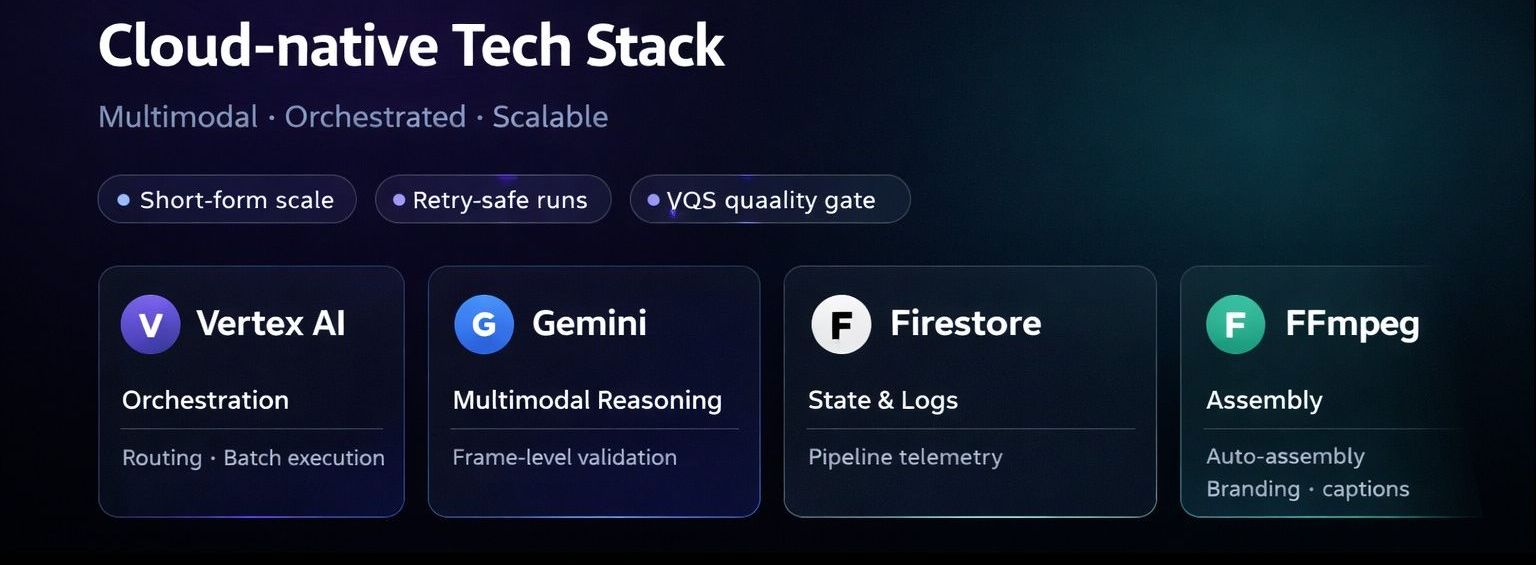

Cloud-native execution + VQS quality gate + retry-safe batch runs.

Phase 2 — Closed-loop learning

Integrate TikTok/YouTube analytics into prompt & routing logic (e.g., via BigQuery).

Phase 3 — Multi-language scaling

Localization + cross-platform templates for global distribution.

Flow

Raw Data / Trend Signals
↓ Intelligence Layer (patterns, candidates)
↓ AI Control Plane (Vertex AI + Firestore)
↓ Multi-Modal Generation (video · image · audio)
↓ VQS Gate (multimodal QA)
↓ Platform-Ready Shorts/Reels/TikTok

VQS acts as the quality gate that prevents late-stage drift and enforces scenario intent.

What we validate (VQS)

• Visual consistency & character identity

• Physical plausibility & anomaly detection

• Text/logo readability (when present)

• Alignment with script & scene intent

• Hook integrity in first seconds
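The checks listed above could be combined into a single pass/fail decision by scoring each one and comparing it with a minimum threshold. The following is a minimal sketch of that idea, not the actual VQS logic: the check keys, the threshold values, and the `vqs_gate` function are illustrative assumptions, and the scores are assumed to come from an upstream multimodal model.

```python
from dataclasses import dataclass
from typing import Dict, List

# Check names mirror the list above; threshold values are illustrative only.
THRESHOLDS: Dict[str, float] = {
    "visual_consistency": 0.8,
    "physical_plausibility": 0.7,
    "text_logo_readability": 0.7,
    "script_alignment": 0.8,
    "hook_integrity": 0.8,
}

# Text/logo readability only applies when text or a logo is present.
OPTIONAL = {"text_logo_readability"}


@dataclass
class Verdict:
    passed: bool
    failed_checks: List[str]


def vqs_gate(scores: Dict[str, float]) -> Verdict:
    """Pass a clip only if every applicable check meets its threshold."""
    failed: List[str] = []
    for name, minimum in THRESHOLDS.items():
        if name not in scores:
            # A missing required check counts as a failure.
            if name not in OPTIONAL:
                failed.append(name)
            continue
        if scores[name] < minimum:
            failed.append(name)
    return Verdict(passed=not failed, failed_checks=failed)
```

Returning the list of failed checks, rather than a bare boolean, lets a retry step or a later rule update see which failure mode triggered the rejection.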

A mobile-friendly stack visual that explains the control plane and feedback loop at a glance.

We use multimodal reasoning for frame-level validation and scalable orchestration for reliable pipeline runs.

V-Factory is developed and operated by a verified EU-based technology enterprise with 4+ years of experience in software engineering and IT infrastructure. We operate as a lean AI lab focused on high-performance automation and proprietary R&D in multimodal AI systems.

Entity: HELPLINE Alexandr Razancav · NIP: 5372660511

Registration: Central Register and Information on Economic Activity (CEIDG), Poland · Status: Active (since 2021), Enterprise Software Development (PKD 62.01.Z).

V-Factory is led by a CTO-level architect with a track record of designing high-availability systems, including YouTube data analytics engines and complex secure cloud infrastructures (Digital Identity & Immortality projects). Our core technology is built on enterprise-grade patterns (Standard/Enterprise Firestore Connectors v2.0) that ensure 100% data integrity and system resilience.

Want early access or partnership? Drop a message — we’ll reply fast.